Topics: Data sonification, mapValue() and mapScale(), Kepler, Python strings, music from text, Guido d’Arezzo, nested loops, file input/output, Python while loop, big data, biosignal sonification, defining functions, image sonification, Python images, visual soundscapes.

Sonification allows us to capture and better experience phenomena that are outside our sensory range by mapping values into sound structures that we can perceive by listening to them. Data for sonification may come from any measurable vibration or fluctuation, such as planetary orbits, magnitudes of earthquakes, positions of branches on a tree, lengths of words in this chapter, and so on. More information is provided in the reference textbook.

Here is code from this chapter:

- Sonifying planetary data

- Making music from text

- Recreating Guido d’Arezzo’s “Word Music” (ca. 1000)

- Sonifying biosignals

- Sonifying images

Sonifying planetary data

In 1619 Johannes Kepler wrote his “Harmonices Mundi (Harmonies of the World)” book (Kepler, 1619).

This code sample (Ch. 7, p. 196) sonifies one aspect of the celestial organization of the planets. In particular, it converts the planets' mean orbital velocities into musical notes.
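The central step of this program is a linear mapping from the range of velocities onto the pitch range C1 to C6. The sketch below reproduces that arithmetic in plain Python so it can be checked without JythonMusic. It is an approximation, not the library's code: `map_to_pitch` is a hypothetical helper, C1 and C6 are assumed to be MIDI pitches 24 and 84, and rounding to the nearest semitone stands in for quantizing to `CHROMATIC_SCALE`.

```python
# Planet mean orbital velocities in km/s, in the order used by harmonicesMundi.py:
# Mercury, Venus, Earth, Mars, Ceres, Jupiter, Saturn, Uranus, Neptune, Pluto
PLANET_VELOCITIES = [47.89, 35.03, 29.79, 24.13, 17.882, 13.06,
                     9.64, 6.81, 5.43, 4.74]

C1 = 24  # assumed MIDI number for C1 (with C4 = 60)
C6 = 84  # assumed MIDI number for C6

def map_to_pitch(value, min_value, max_value, min_pitch, max_pitch):
    """Hypothetical helper: map value linearly from [min_value, max_value]
    onto [min_pitch, max_pitch] and round to the nearest semitone.
    Rounding stands in for quantizing to a chromatic scale."""
    if not min_value <= value <= max_value:
        raise ValueError("%s is outside %s..%s" % (value, min_value, max_value))
    normal = (value - min_value) / float(max_value - min_value)  # 0.0 to 1.0
    return int(round(normal * (max_pitch - min_pitch) + min_pitch))

min_velocity = min(PLANET_VELOCITIES)
max_velocity = max(PLANET_VELOCITIES)
planet_pitches = [map_to_pitch(v, min_velocity, max_velocity, C1, C6)
                  for v in PLANET_VELOCITIES]
# planet_pitches -> [84, 66, 59, 51, 42, 36, 31, 27, 25, 24]
```

Under these assumptions the fastest planet, Mercury, lands on C6 and the slowest, Pluto, on C1, while Uranus, Neptune, and Pluto end up within three semitones of one another because their velocities are close together.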

```python
# harmonicesMundi.py
#
# Sonify mean planetary velocities in the solar system.
#

from music import *

# create a list of planet mean orbital velocities (km/s)
# Mercury, Venus, Earth, Mars, Ceres, Jupiter,
# Saturn, Uranus, Neptune, Pluto
planetVelocities = [47.89, 35.03, 29.79, 24.13, 17.882, 13.06,
                    9.64, 6.81, 5.43, 4.74]

# get minimum and maximum velocities
minVelocity = min(planetVelocities)
maxVelocity = max(planetVelocities)

# calculate pitches
planetPitches = []     # holds list of sonified velocities
planetDurations = []   # holds list of durations

for velocity in planetVelocities:
    # map a velocity to pitch and save it
    pitch = mapScale(velocity, minVelocity, maxVelocity, C1, C6,
                     CHROMATIC_SCALE)
    planetPitches.append( pitch )
    planetDurations.append( EN )   # for now, keep duration fixed

# create melody 1 (theme), which starts at the beginning
melody1 = Phrase(0.0)
melody1.addNoteList(planetPitches, planetDurations)

# melody 2 starts 10 beats into the piece and
# is elongated by a factor of 2
melody2 = melody1.copy()
melody2.setStartTime(10.0)
Mod.elongate(melody2, 2.0)

# melody 3 starts 20 beats into the piece and
# is elongated by a factor of 4
melody3 = melody1.copy()
melody3.setStartTime(20.0)
Mod.elongate(melody3, 4.0)

# repeat melodies appropriate times, so they will end together
Mod.repeat(melody1, 8)
Mod.repeat(melody2, 3)

# create parts with different instruments and add melodies
part1 = Part("Eighth Notes", PIANO, 0)
part2 = Part("Quarter Notes", FLUTE, 1)
part3 = Part("Half Notes", TRUMPET, 3)

part1.addPhrase(melody1)
part2.addPhrase(melody2)
part3.addPhrase(melody3)

# finally, create, view, play, and write the score
score = Score("Celestial Canon")
score.addPart(part1)
score.addPart(part2)
score.addPart(part3)

View.sketch(score)
Play.midi(score)
Write.midi(score, "harmonicesMundi.mid")
```
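The repeat counts are chosen so that all three voices finish together. The arithmetic can be checked in plain Python, assuming that EN lasts 0.5 beats, that `Mod.elongate(phrase, k)` multiplies every duration by k, and that the second argument of `Mod.repeat` is the total number of times the melody is played (the program's comment implies this, but it is an assumption here):

```python
EN = 0.5         # assumed length of an eighth note, in beats
NUM_NOTES = 10   # one note per planet in the theme

def end_time(start, elongation, plays):
    """Beat at which a voice ends: its start time plus the elongated
    theme length times the total number of plays."""
    theme_length = NUM_NOTES * EN * elongation
    return start + theme_length * plays

# voice name -> (start time, elongation factor, total number of plays)
voices = {
    "melody1": (0.0, 1.0, 8),
    "melody2": (10.0, 2.0, 3),
    "melody3": (20.0, 4.0, 1),
}
end_times = {name: end_time(*params) for name, params in voices.items()}
# every voice ends on beat 40.0
```

The theme lasts 5 beats, so the eighth-note voice needs 8 plays, the quarter-note voice (10 beats, starting at beat 10) needs 3, and the half-note voice (20 beats, starting at beat 20) needs only 1.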


Making music from text

This code sample (Ch. 7, p. 202) demonstrates how to generate music from text. It converts the ASCII value of each character to a MIDI pitch and selects each note's duration from a weighted list, again based on the ASCII value. For variety, note dynamics (volumes) are randomized; other note properties (panning, instrument, etc.) are the same for all notes.
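The duration list repeats some values to weight them, but because the index is computed from each character's ASCII value rather than drawn at random, the weighting that actually results depends on which characters occur. The sketch below recomputes that choice in plain Python. It rests on assumptions: `duration_for` is a hypothetical helper, HN, QN, EN, and SN are taken to be 2.0, 1.0, 0.5, and 0.25 beats, and the mapped index is truncated to an integer, which is how we expect `mapValue` to treat an integer destination range. The helper raises `ValueError` for characters outside 32..126, such as a newline.

```python
HN, QN, EN, SN = 2.0, 1.0, 0.5, 0.25   # assumed note lengths in beats

# weighted list from textMusic.py: repetitions act as weights (1:4:4:2)
DURATIONS = [HN] + [QN] * 4 + [EN] * 4 + [SN] * 2

def duration_for(character):
    """Pick a duration for a printable ASCII character (codes 32 to 126).

    Assumption: like mapValue with an integer destination range, the
    linear map from 32..126 onto 0..len(DURATIONS)-1 is truncated to int.
    """
    value = ord(character)
    if not 32 <= value <= 126:
        raise ValueError("not a printable ASCII character: %r" % character)
    normal = (value - 32) / float(126 - 32)      # 0.0 to 1.0
    index = int(normal * (len(DURATIONS) - 1))   # 0 to 10
    return DURATIONS[index]

# tally which durations a sentence actually produces
sentence = "The drama's done. Why then here does any one step forth?"
counts = {}
for character in sentence:
    duration = duration_for(character)
    counts[duration] = counts.get(duration, 0) + 1
# counts -> {2.0: 11, 1.0: 2, 0.5: 41, 0.25: 2}
```

For this sentence the tally is 11 half notes (10 spaces and the apostrophe), 2 quarter notes ("." and "?"), 41 eighth notes, and 2 sixteenth notes (the two "y"s). Lowercase "a" through "t" all map to eighth notes, so the quarter note, despite its weight of 4, only comes from ASCII codes 42 to 78 (digits, some punctuation, and the capitals A to N).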

```python
# textMusic.py
#
# Demonstrates one way to generate music from text.
#
#  * Note pitch is based on the ASCII value of characters.
#  * Note duration is selected from a weighted-probability list
#    (again, based on ASCII value).
#  * Note dynamic is selected randomly.
#
# All other music parameters (panning, instrument, etc.)
# are kept constant.

from music import *
from random import *

# Define text to sonify.
# Excerpt from Herman Melville's "Moby-Dick", Epilogue (1851)
text = """The drama's done. Why then here does any one step forth? - Because one did survive the wreck. """

##### define the data structure
textMusicScore  = Score("Moby-Dick melody", 130)
textMusicPart   = Part("Moby-Dick melody", GLOCK, 0)
textMusicPhrase = Phrase()

# create durations list (factors correspond to probability)
durations = [HN] + [QN]*4 + [EN]*4 + [SN]*2

##### create musical data
for character in text:     # loop over every character in the text

    value = ord(character)   # convert character to ASCII number

    # map printable ASCII values to a pitch value
    pitch = mapScale(value, 32, 126, C3, C6, PENTATONIC_SCALE, C2)

    # map printable ASCII values to a duration value
    index = mapValue(value, 32, 126, 0, len(durations)-1)
    duration = durations[index]

    print "value", value, "becomes pitch", pitch,
    print "and duration", duration

    dynamic = randint(60, 120)              # get a random dynamic

    note = Note(pitch, duration, dynamic)   # create note
    textMusicPhrase.addNote(note)           # and add it to phrase

# now, all characters have been converted to notes

# add ending note (same pitch as the last one, only longer)
note = Note(pitch, WN)
textMusicPhrase.addNote(note)

##### combine musical material
textMusicPart.addPhrase(textMusicPhrase)
textMusicScore.addPart(textMusicPart)

##### view, play, and write the score to a MIDI file
View.show(textMusicScore)
Play.midi(textMusicScore)
Write.midi(textMusicScore, "textMusic.mid")
```


Recreating Guido d’Arezzo’s “Word Music” (ca. 1000)

One of the oldest known algorithmic music processes is a rule-based algorithm that selects each note based on the letters in a text, credited to Guido d’Arezzo (991 – 1033).

This code sample (Ch. 7, p. 207) is an approximation to d’Arezzo’s algorithm, adapted to text written in ASCII.
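To see exactly which notes the two rules produce, here is a plain-Python sketch that returns (pitch, duration) pairs instead of building a Phrase. The helper `guido_notes` is hypothetical, and the MIDI numbers for C4, D4, E4, G4, and A4 (60, 62, 64, 67, 69) are assumed rather than taken from the music library:

```python
# Assumed MIDI numbers: C4 = 60, D4 = 62, E4 = 64, G4 = 67, A4 = 69
VOWEL_PITCHES = {"a": 60, "e": 62, "i": 64, "o": 67, "u": 69}
DURATION_FACTOR = 0.1   # same scaling as guidoWordMusic.py

def guido_notes(text, duration_factor=DURATION_FACTOR):
    """Return (pitch, duration) pairs: one note per vowel, each lasting
    len(word) * duration_factor beats, where len(word) counts every
    character of the word (consonants and punctuation included)."""
    notes = []
    for word in text.lower().split():
        duration = len(word) * duration_factor
        for character in word:
            if character in VOWEL_PITCHES:
                notes.append((VOWEL_PITCHES[character], duration))
    return notes

notes = guido_notes("Guido d'Arezzo")
```

For "Guido d'Arezzo" the first word yields A4, E4, G4, each lasting 0.5 beats (5 characters times 0.1), and the second yields C4, D4, G4, each lasting about 0.8 beats, because the apostrophe and the consonants in "d'arezzo" all count toward its length of 8.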

```python
# guidoWordMusic.py
#
# Creates a melody from text using the following rules:
#
# 1) Vowels specify pentatonic pitch, 'a' is C4, 'e' is D4,
#    'i' is E4, 'o' is G4, and 'u' is A4.
#
# 2) Consonants produce no notes, but they contribute to the
#    duration of all vowels within a word (if any), since every
#    vowel note lasts len(word) * durationFactor beats.
#

from music import *

# this is the text to be sonified
text = """One of the oldest known algorithmic music processes is a rule-based algorithm that selects each note based on the letters in a text, credited to Guido d'Arezzo."""

text = text.lower()   # convert string to lowercase

# define vowels and corresponding pitches (parallel sequences),
# i.e., first vowel goes with first pitch, and so on.
vowels = "aeiou"
vowelPitches = [C4, D4, E4, G4, A4]

# define consonants (for reference; the loop below only checks vowels)
consonants = "bcdfghjklmnpqrstvwxyz"

# define parallel lists to hold pitches and durations
pitches = []
durations = []

# factor used to scale durations
durationFactor = 0.1   # higher for longer durations

# separate text into words (using whitespace as delimiter)
words = text.split()

# iterate through every word in the text
for word in words:

    # iterate through every character in this word
    for character in word:

        # is this character a vowel?
        if character in vowels:

            # yes, so find its position in the vowel list
            index = vowels.find(character)

            # and use position to find the corresponding pitch
            pitch = vowelPitches[index]

            # finally, remember this pitch
            pitches.append( pitch )

            # create duration from the word length
            duration = len( word ) * durationFactor

            # and remember it
            durations.append( duration )

# now, pitches and durations have been created
# so, add them to a phrase
melody = Phrase()
melody.addNoteList(pitches, durations)

# view and play melody
View.notation(melody)
Play.midi(melody)
```


Sonifying biosignals

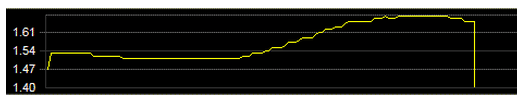

Here we explore pre-processing and sonification of data from biological processes. The figure below displays heart data, captured by measuring blood pressure over time.

The figure below displays skin conductance, captured by measuring electrical conductivity between two fingers over time.

This code sample (Ch. 7, p. 217) explores one possible sonification of these data. Before running this program, download the complete data file (biosignals.txt) into your JythonMusic folder.
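The program expects each line of biosignals.txt to hold three whitespace-separated numbers: a time stamp, a skin conductance value, and a heart value. The sketch below is a hypothetical helper, `read_biosignals`, that performs the same parsing on any sequence of lines (an open file works) and additionally skips blank lines, which would otherwise make the three-way unpacking fail:

```python
def read_biosignals(lines):
    """Split whitespace-separated 'time skin heart' lines into two lists
    of floats (skin conductance values, heart values).

    Hypothetical helper: blank lines are skipped; any other line that
    does not hold exactly three fields raises ValueError on unpacking.
    """
    skin_data, heart_data = [], []
    for line in lines:
        fields = line.split()
        if not fields:            # tolerate blank lines, e.g. at end of file
            continue
        _time, skin, heart = fields
        skin_data.append(float(skin))
        heart_data.append(float(heart))
    return skin_data, heart_data
```

With a real file it can be called as `with open("biosignals.txt") as data: skinData, heartData = read_biosignals(data)`, which also closes the file automatically.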

```python
# sonifyBiosignals.py
#
# Sonify skin conductance and heart data to pitch and dynamic.
#
# Sonification design:
#
#  * Skin conductance is mapped to pitch (C3 - C6).
#  * Heart value is mapped to a pitch variation (0 to 24).
#  * Heart value is mapped to dynamic (0 - 127).
#
# NOTE: Pitch and pitch variation are each quantized to the
#       C Major scale (their sum may occasionally fall outside it).
#

from music import *

# first, let's read in the data
data = open("biosignals.txt", "r")

# read and process every line
skinData = []    # holds skin data
heartData = []   # holds heart data

for line in data:
    time, skin, heart = line.split()   # extract the three values
    skin = float(skin)                 # convert from string to float
    heart = float(heart)               # convert from string to float
    skinData.append(skin)              # keep the skin data
    heartData.append(heart)            # keep the heart data

# now, skinData and heartData contain all the values
data.close()   # done, so let's close the file

##### define the data structure
biomusicScore = Score("Biosignal sonification", 150)
biomusicPart = Part(PIANO, 0)
biomusicPhrase = Phrase()

# let's find the range extremes
heartMinValue = min(heartData)
heartMaxValue = max(heartData)
skinMinValue = min(skinData)
skinMaxValue = max(skinData)

# let's sonify the data
i = 0   # point to first value in data
while i < len(heartData):   # while there are more values, loop

    # map skin conductance to pitch
    pitch = mapScale(skinData[i], skinMinValue, skinMaxValue, C3, C6,
                     MAJOR_SCALE, C4)

    # map heart data to a variation of pitch
    pitchVariation = mapScale(heartData[i], heartMinValue,
                              heartMaxValue, 0, 24, MAJOR_SCALE, C4)

    # also map heart data to dynamic
    dynamic = mapValue(heartData[i], heartMinValue, heartMaxValue,
                       0, 127)

    # finally, combine pitch, pitch variation, and dynamic into note
    note = Note(pitch + pitchVariation, TN, dynamic)

    # add it to the melody so far
    biomusicPhrase.addNote(note)

    # point to next value in heart and skin data
    i = i + 1

# now, biomusicPhrase contains all the sonified values

##### combine musical material
biomusicPart.addPhrase(biomusicPhrase)
biomusicScore.addPart(biomusicPart)

##### view score, write it to a MIDI file, and play it
View.sketch(biomusicScore)
Write.midi(biomusicScore, "sonifyBiosignals.mid")
Play.midi(biomusicScore)
```
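Both `pitch` and `pitchVariation` are quantized to C major, but adding an interval to a scale tone can land between scale tones, so their sum is not guaranteed to stay in the scale. A quick plain-Python check, using MIDI numbers (C3 = 48 and C6 = 84 are assumed values) and the major-scale pitch classes 0, 2, 4, 5, 7, 9, 11; the helper names are hypothetical:

```python
MAJOR_PITCH_CLASSES = [0, 2, 4, 5, 7, 9, 11]   # C major, relative to C

def in_c_major(pitch):
    """True if a MIDI pitch belongs to the C major scale."""
    return pitch % 12 in MAJOR_PITCH_CLASSES

def out_of_scale_sums(low, high, max_variation):
    """List (pitch, variation, sum) triples where pitch and variation are
    both C-major values but pitch + variation is not."""
    results = []
    for pitch in range(low, high + 1):
        if not in_c_major(pitch):
            continue
        for variation in range(0, max_variation + 1):
            if in_c_major(variation) and not in_c_major(pitch + variation):
                results.append((pitch, variation, pitch + variation))
    return results

# skin range C3..C6 (assumed 48..84), heart variation range 0..24
problems = out_of_scale_sums(48, 84, 24)
```

For example, E4 (64) plus a variation of 2 gives F#4 (66), which is outside C major.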


Sonifying images

This code sample (Ch. 7, p. 231) demonstrates how to sonify (generate music from) images. It sonifies the image soundscapeLoutrakiSunset.jpg, a sunset scene at Loutraki.

Before running this program, download this image into your JythonMusic folder.

Here is the code:

```python
# sonifyImage.py
#
# Demonstrates how to create a soundscape from an image.
# It also demonstrates how to use functions.
# It loads a jpg image and scans it from left to right.
# Pixels are mapped to notes using these rules:
#
#  * left-to-right column position is mapped to time,
#  * luminosity (pixel brightness) is mapped to pitch within a scale,
#  * redness (pixel R value) is mapped to duration, and
#  * blueness (pixel B value) is mapped to volume.
#

from music import *
from image import *
from random import *

##### define data structure
soundscapeScore = Score("Loutraki Soundscape", 60)
soundscapePart = Part(PIANO, 0)

##### define musical parameters
scale = MIXOLYDIAN_SCALE

minPitch = 0        # MIDI pitch (0-127)
maxPitch = 127

minDuration = 0.8   # duration (1.0 is QN)
maxDuration = 6.0

minVolume = 0       # MIDI velocity (0-127)
maxVolume = 127

# start time is randomly displaced by one of these
# durations (for variety)
timeDisplacement = [DEN, EN, SN, TN]

##### read in image (origin (0, 0) is at top left)
image = Image("soundscapeLoutrakiSunset.jpg")

# specify image pixel rows to sonify - this depends on the image!
pixelRows = [0, 53, 106, 159, 212]

width = image.getWidth()     # get number of columns in image
height = image.getHeight()   # get number of rows in image

##### define function to sonify one pixel
# Returns a note from sonifying the RGB values of 'pixel'.
def sonifyPixel(pixel):

    red, green, blue = pixel                # get pixel RGB value
    luminosity = (red + green + blue) / 3   # calculate brightness

    # map luminosity to pitch (the brighter the pixel, the higher
    # the pitch) using specified scale
    pitch = mapScale(luminosity, 0, 255, minPitch, maxPitch, scale)

    # map red value to duration (the redder the pixel, the longer
    # the note)
    duration = mapValue(red, 0, 255, minDuration, maxDuration)

    # map blue value to dynamic (the bluer the pixel, the louder
    # the note)
    dynamic = mapValue(blue, 0, 255, minVolume, maxVolume)

    # create note
    note = Note(pitch, duration, dynamic)

    # done sonifying this pixel, so return result
    return note

##### create musical data
# sonify image pixels
for row in pixelRows:          # iterate through selected rows

    for col in range(width):   # iterate through all pixels on this row

        # get pixel at current coordinates (col and row)
        pixel = image.getPixel(col, row)

        # sonify this pixel (we get a note)
        note = sonifyPixel(pixel)

        # wrap note in a phrase to give it a start time
        # (Phrases have start time, Notes do not)

        # use column value as note start time (e.g., 0.0, 1.0, and so on)
        startTime = float(col)   # phrase start time is a float

        # add some random displacement for variety
        startTime = startTime + choice( timeDisplacement )

        phrase = Phrase(startTime)   # create phrase with given start time
        phrase.addNote(note)         # and put the note in it

        # put result in part
        soundscapePart.addPhrase(phrase)

    # now, all pixels on this row have been sonified

# now, all pixelRows have been sonified, and soundscapePart
# contains all notes

##### combine musical material
soundscapeScore.addPart(soundscapePart)

##### view score and write it to a MIDI file
View.sketch(soundscapeScore)
Write.midi(soundscapeScore, "soundscapeLoutrakiSunset.mid")
```
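The mapping rules in `sonifyPixel` can be tried out without an image file or JythonMusic. The sketch below is an approximation under stated assumptions: `linear_map` and `pixel_to_note_values` are hypothetical helpers; `linear_map` mimics what we assume `mapValue` does (linear interpolation, with the result converted to the type of the lower destination bound, so integer results are truncated); and the pitch is left unquantized instead of being snapped to `MIXOLYDIAN_SCALE` as `mapScale` does.

```python
MIN_PITCH, MAX_PITCH = 0, 127
MIN_DURATION, MAX_DURATION = 0.8, 6.0
MIN_VOLUME, MAX_VOLUME = 0, 127

def linear_map(value, min_value, max_value, min_result, max_result):
    """Map value linearly into [min_result, max_result]; the result takes
    the type of min_result (int results are truncated). This behavior is
    an assumption about mapValue, not its actual source."""
    normal = (value - min_value) / float(max_value - min_value)
    result = normal * (max_result - min_result) + min_result
    return type(min_result)(result)

def pixel_to_note_values(pixel):
    """Return (pitch, duration, dynamic) for an (R, G, B) pixel, skipping
    the scale quantization that mapScale applies to the pitch."""
    red, green, blue = pixel
    luminosity = (red + green + blue) // 3   # integer average, 0 to 255
    pitch = linear_map(luminosity, 0, 255, MIN_PITCH, MAX_PITCH)
    duration = linear_map(red, 0, 255, MIN_DURATION, MAX_DURATION)
    dynamic = linear_map(blue, 0, 255, MIN_VOLUME, MAX_VOLUME)
    return pitch, duration, dynamic
```

Under these assumptions a pure red pixel (255, 0, 0) yields pitch 42, duration 6.0, and dynamic 0, a long but effectively silent note (MIDI velocity 0), while a pure blue pixel yields a short note at full volume. Color balance, not only brightness, therefore shapes the texture of the soundscape.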


More examples of image sonification

“Daintree Drones”, by Kenneth Hanson, is another example of image sonification, used to generate a circular piece.

This is from a Computing in the Arts student exhibit, entitled “Visual Soundscapes”, which was funded in part by the National Science Foundation (DUE #1044861).

Other examples from the exhibit are available here.